NY Times Connections Solver PT. 1

Olive Oil:⌗

During my daily morning ritual of waking up at 4:30 AM and immediately ingesting a ½ cup of [Trader Joes Extra Virgin Spanish Olive Oil] (Sponsored link), I decided I’d like to try and solve the NY Time’s Connections. So, with greasy fingers, lets begin.

This is part one, where I:

- Review the rules of Connections

- Gather historical Connections games into a structured dataset

- Come up with some strategy for a program that’s somewhat capable of playing the game well

In part two, I’ll try and generate a solver for the game. I am not an expert on NLP, nor do I know much about word embeddings, nor do I know much about word embeddings given context, but I’m quite curious to learn. Here in part one, I’m going to nail out something semi-interesting and treat part two as a learning experience.

Overview/Definitions:⌗

Connections is one of many NY Times games. It began in June 2023 and releases a puzzle daily. Outside of drinking straight oil, it’s one of my favorite things to do in the morning… so why not ruin it by figuring out a way to convert all historical games into a table and write SQL against it!

Connections is quite simple. You’re provided a 4x4 grid of word tiles. Words are grouped into sets of four based on a connecting category of varying difficulty. I’ll write about some patterns I discovered in the categories later.

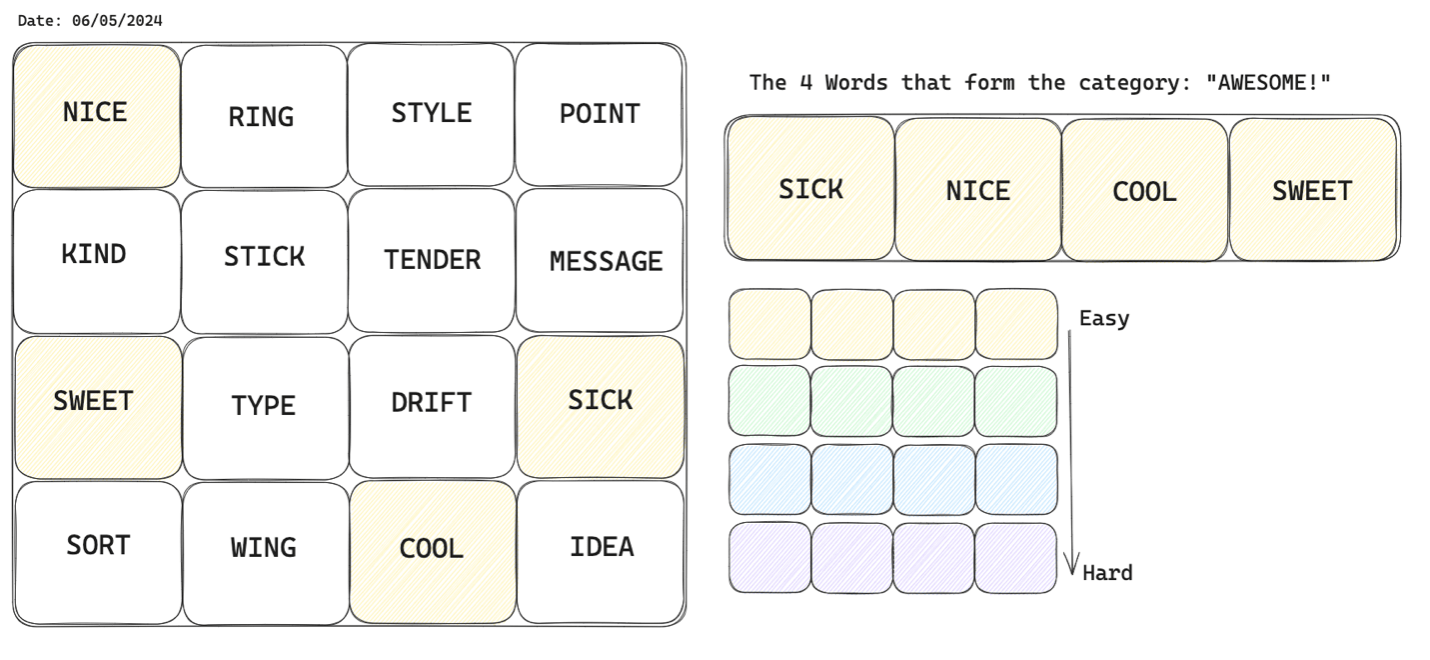

Above is a picture containing the Connections game from 6/05/2024. Funny enough, this particular game so-happens to contain the most common word used across the history of connections which is

Ringused, now, 11 times.

In this example, the four words “Sick”, “Nice”, “Cool”, and “Sweet” can be used to form the “Awesome!” category. This category was designated the “easiest” of all categories, although this definition differs from person to person as not everyone would associate Sick as a synonym for “Awesome!”. Personally, I associate sick with what happens after I drink my ½ cup of oil which, in my opinion, is pretty awesome… so, for me, it makes sense, but you do you. Rinse and repeat with the remaining 12 words, and that’s Connections.

Defining a few things to stay on the same page moving forward:

- Category: A grouping of 4 words in a game

- Words: A single tile in a game

- Difficulty: How “Straightforward (easy)” or “Tricky (hard)” a category was in a game.

How does ChatGPT-4o Do?⌗

I’ll start with a single days example from June 8th , 2024. This example aligns with a status quo Connections game, you’ll see why I say this in the analysis section.

**SPOILER ALERT** Below is the solution to the puzzle with the difficulty, category and words.

- [Easiest] Grab One’s Attention: ABSORB, ENGROSS, HOLD, RIVET

- [Easy] Document with Video: FILM, RECORD, SHOOT, TAPE

- [Medium] ANAGRAMS: PASTEL, PETALS, PLEATS, STAPLE

- [Hard] PRETTY ___: GOOD, PENNY, PLEASE, WOMAN

I made sure that the model is familiar with the game; however, at this point, I don’t offer it any previous connections data or answers to go off of.

Prompt:⌗

"Solve today’s game, providing the category defining each word grouping.

Words: Pleats, Please, Hold, Record, Woman, Film, Good, Pastel, Penny,

Staple, Tape, Rivet, Absorb, Petals, Engross, Shoot."

Response:⌗

- Words related to movies: Shoot, Film, Tape, Record

[CORRECT] - Words related to types of fasteners: Rivet, Staple, Pleats, Hold

[WRONG], but I also considered this possibility - Words related to artistic or aesthetic qualities: Pastel, Penny, Woman, Good

[WRONG]but close - Words related to engagement or involvement: Absorb, Please, Petals, Engross

[WRONG]

When provided limited context or historical data, the LLM typically gets the two easier categories right (some cluster of closely related words). However, without any additional context, the model tends to miss the harder categories sometimes even letting mistakes spill back into the easier ones causing additional misses. This isn’t news to anybody, but illustrates that better prompts get better responses… if you’re going to go Oscar the Grouch mode (his birthday was June 1 BTW) expect a garbage in -> garbage out scenario.

Let’s provide an additional prompt denoting that the possibility of certain categories exists.

Prompt:⌗

“These are incorrect, take into account that answers can involve grouping words

that have similar meaning with the addition of a given prefix or suffix, homophones,

and anagrams can all be at play to create a category. Please try again.”

Response:⌗

- Recording-related terms: Record, Tape, Film, Shoot

[CORRECT] - Anagrams of ‘Staple’: Pleats, Pastel, Staple, Petals

[CORRECT] - Words that can follow ‘Please’: Please, Hold, Absorb, Engross

[WRONG]. The words almost form the easiest category, but it forces the prefix concept unnecessarily. - Terms associated with monetary or value: Penny, Good, Woman, Rivet (stretch, but possibly in terms of securing value)

[WRONG]Switching “Rivet” and “Please” would yield a correct puzzle, but the categories are off significantly. It evens notes “Rivet” is a stretch.

While this attempt recognizes the anagram, the “Pretty ___ “ category seems to be fairly elusive for it and there is no idea of “Attention” in any category.

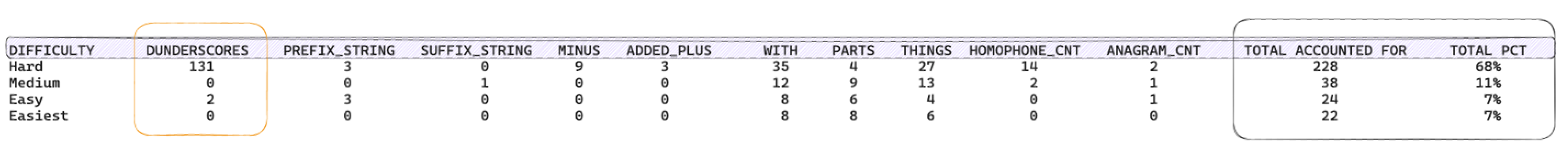

Final time… I provided a month’s worth of historical connections games with the categories, their associated difficulty and the four words. This, initially, caused it to regress significantly, providing an output that no longer followed the rules of the game. After re-orienting back to the task on hand, it butchered its previous progress and… umm… gave a response that added “Woman” to a category called “Objects”…

After providing thirty Connections puzzles, I found it was possible for the model to get them correct but only on easier days and after several prompts. If the model receives an extra hint, such as “I’m sure these three words together are in category “X”, but I don’t know the fourth word,” it usually manages to provide a correct answer.

There are probably better prompts, more prompts, or a curated LLM that could accurately solve most of these puzzles. There is certainly a good amount to explore in prompting an LLM and trying to get it to solve Connections.

Retrieving historical Connections games:⌗

I figured a good place to start would be to create a table containing all historical connections games properly labeled with words, categories, difficulty, and date. This dataset would serve as the basis for understanding common patterns in Connections, as well as a clean set of labeled training data I could use for whatever solution I envisioned.

While the NY Times has a Wordle Archive, there is no such Connections archive. Luckily, there are a myriad of websites that host all historical connections games.

So, I LOCKED IN and spent ~5 days copying…

Nah… actually, I sent a couple cold emails to any address I could find on these sites asking if they would share a file containing the games and answers. No response. I did a quick search of Github and Reddit to see if I could uncover any leads, but no… ok sooooo web-scraping, Yay!

I wrote a quick script to scrape one of the historical Connections sites into a text file. It quickly loops through each month of connections data on the site, extracts the paragraphs with the games and writes them to a text file with a separator. I’m going to leave the URL out, but you can find the site quite easily if you look. After writing 335 connections games to the text file, I convert it to a csv and remove duplicates. Now, nobody needs to scrape. They can just steal from me.

This is probably a good time to point out that this whole process should be a DAG, but I dont care about robustness or repeatability right now so… shortcuts!

Analytics (Cleaning):⌗

Cool, so now that we have our “Clean” CSV, we can copy it directly into a table using duckDB and explore all these historical games. With duckDB you can directly COPY a CSV from disk into a table in a database. Ten lines of code later, and we’ve got a table.

In our new table:

- Each game consists of 16 rows, one for each word

- A category, on a particular day, can be described with four rows

I choose this because I didn’t want to deal with any unnesting logic for viewing individual words, which would be necessary if I collapsed a game into 4 rows and saved the words in an ARRAY/LIST or just LISTAGG’ed the strings together.

FYI, duckDB adopted the SQL standard of 1 based indexing a couple years ago.

You may have noticed I put “CLEAN” in quotes earlier. I’ve got some half-baked web-scraping and transform logic I cheffed up in ~ 1 hour, so I figured there was about a 1% chance I missed some stuff… and it turns out I did indeed miss some stuff.

Date-category combinations should have four rows. A count of rows (CNT) divided by four should return 1, and then, 1 mod 1 should equal zero. However, the below query returned six instances where CNT equaled 1.25. I discovered hyphenated words like “Yo-Yo” were written as two rows.

Alright, probably good but let’s run some summary stats to be sure:

Oops, why is TOTAL_DISTINCT_DAYS 334.5?

- I assumed every game was unique

- There was some boiler plate text in the text file from the web scrape that was duplicated

- So… why not remove rows in the text file that were duplicates of a previously seen row to clean it up?

- Wrong! Connections reuses categories with identical words:

- August 30th, 2023, and May 30th, 2024: “Influence” category.

- January 11th, 2024, July 25th, 2023, and August 15th, 2023: “States of matter” category.

Instead of fixing this in the extract code, I just stripped them out of the table and called the clean_table funtion at the end of pipeline.py. Sweet, clean CSV, no quotes this time.

- I also removed 4/1/2024, April Fools day, when all words were Emojis ¯\_(ツ)_/¯

Analytics (insights):⌗

I hacked together a few queries in a Jupyter notebook style file, printing data frames to the console. Over the +/- 335 games in this dataset there were:

- ~3500 distinct words, not many words have spaces (fewer than 30 occurences), and most words are 4 to 5 letters.

- Word length is consistent across difficulties on average 5.1 letters

- Most commonly reused words: {RING:11, BALL:10, COPY:9, LEAD:9, WING:8, JACK:8, CUT:8, HEART:8}

- Shortest words are individial letters like “X”, occuring only twelve times

- Longest words include Peppermint Patty, Concentration, Mashed Potato, and Horsefeathers. Horsefeathers is synonymous with nonsense or rubbish (I could see myself yelling this in an argument)

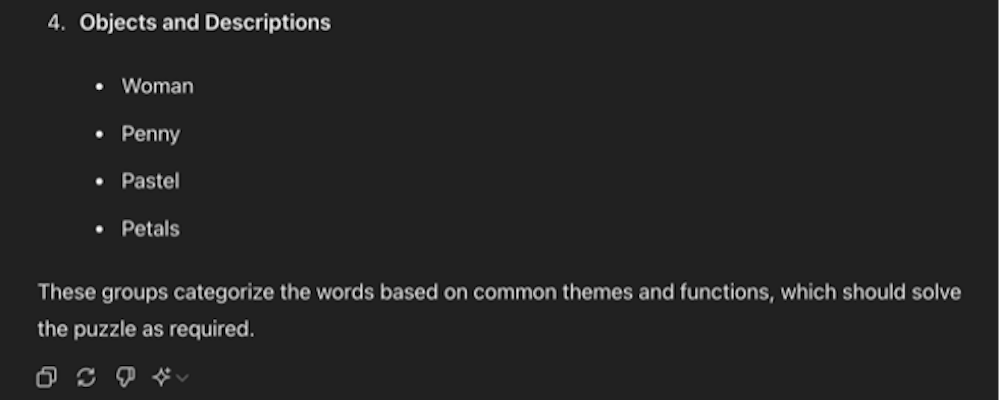

Pivoting out difficulty by category type:

Unpacking a few things:

-

This is biased towards the “Hard” categories, describing ~70% of them. This is due to this category being pattern focused and based on challenging word play each day. The only way I’m going to be able to solve these is to reduce the degrees of freedom in selection or determine the pattern with high confidence.

Dunderscores are extremely common, describing ~40% of hard categories: - ROCK ___: (Star, Candy, Bottom, Garden) - ___ MOON: (Sailor, New, Blue, Harvest) Homophones show up a few times, and I should probably just do a static check for these against a database of English language homophones. Anagrams should be checked similarly. -

Prefix_String, Suffix_String, Minus, Added_Plus all denote occasions where the words are similar due to having some character(s) appended or removed.

-

WITH categories are a significant challenge. I’m not sure how to approach them yet.

Things With Trunks: (Cars, Elephants, Swimmers, Trees) Tarot Carods With “THE”: (Fool, Lovers, Magician, Tower) -

THINGS overlaps WITH 11 times in the data, so I could have included the intersection of the two: THINGS WITH ___. Instead, I’ve just pulled the intersections into the WITH category.

Things People Shake: (Hands, Maraca, Polaroid, Snowglobe) Things to crack: (Egg, Knuckle, Smile, Window) -

Medium is typically some niche knowledge like countries with red and white flags, weapons in the game Clue, or British cuisine. To some, this is akin to “Easy” if they are familiar with the terms. For the less familiar, these can be confounding and lead to misses.

-

Easiest/Easy difficulties are generally tightly related words without requiring a layer of context. However, they aren’t always so straightforward.

Connect: (Couple, Link, Tie, Join) Attach with adhesive: (Adhere, Glue, Paste, Stick)

Filtering for the triple dunderscore categories was a bit tricky, with _ being reserved. In Redshift, I was able to do LIKE ‘%\\\\\_\_\_%’ , but this doesn’t seem to work in duckDB. Instead, I went with some length based logic checking for at least a difference of 2 characters once stripping them out:

Final thoughts on a solution:⌗

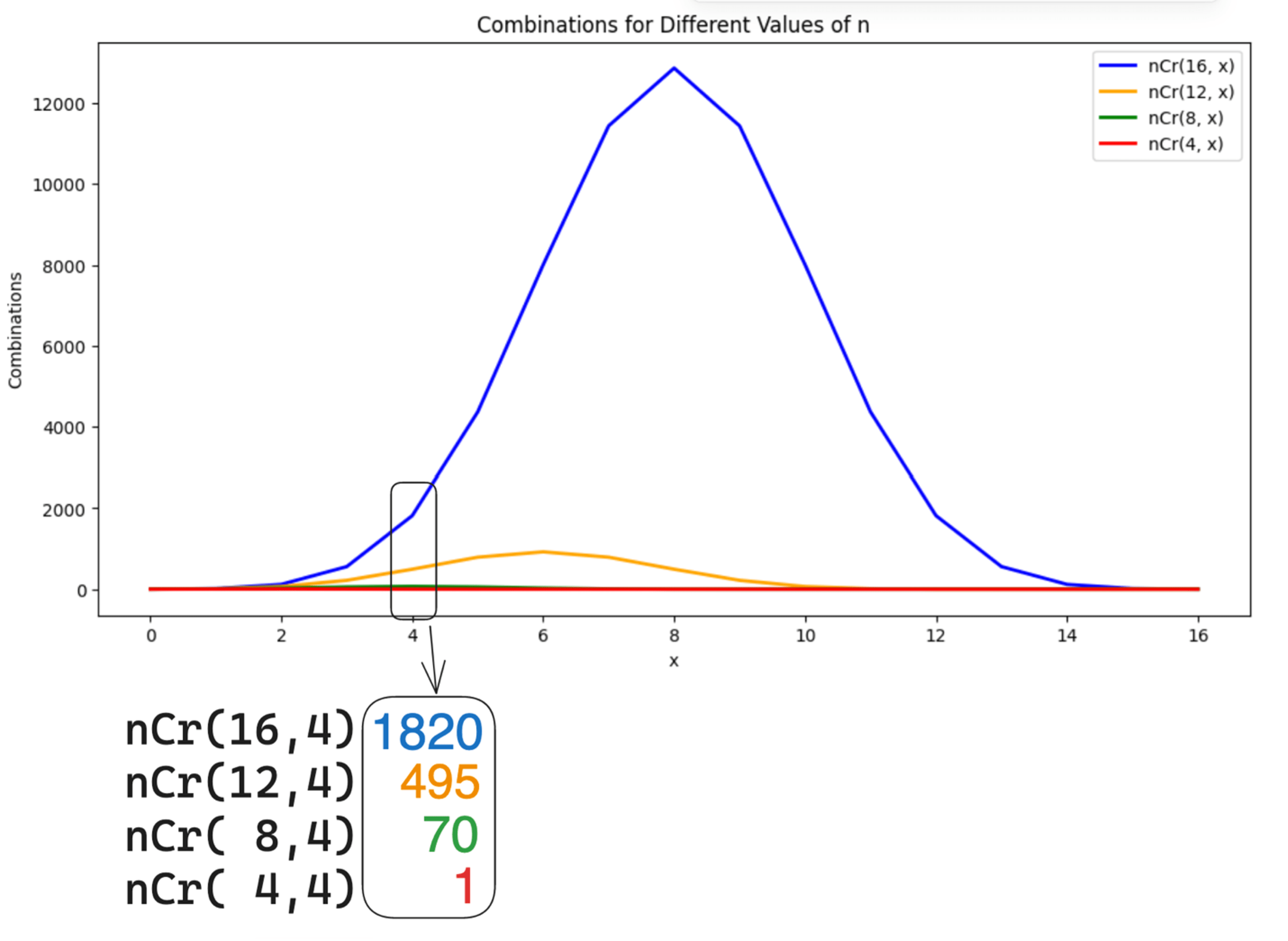

One advantage of this problem is that its surface area decreases exponentially with the reduction in the size of the initial set. Something I never explicitly provided Chat-GPT was information regarding which categories it had gotten right and what was wrong. Allowing it to build on top of past decisions, and reducing the size of the problem.

For combinations, the formula: C(n,r)=n!/r!(n-r)!

Evaluates the number of ways a sample of “r” elements can be obtained from a larger set of “n” distinguishable objects where order does not matter (unlike permutations) and repetitions are not allowed.

At the beginning of any Connections game, a player will choose 4 words from a set of 16 yielding 1820 possible combinations.

- C(16,4)=16!/4!(16-4) = 1820

As categories are found and labeled as correct the set is reduced: 16->12->8->4

- C(12,4)=12!/4!(12-4) = 495

- C(8,4)=8!/4!(8-4) = 70

- C(4,4)=4!/4!(4-4) = 1

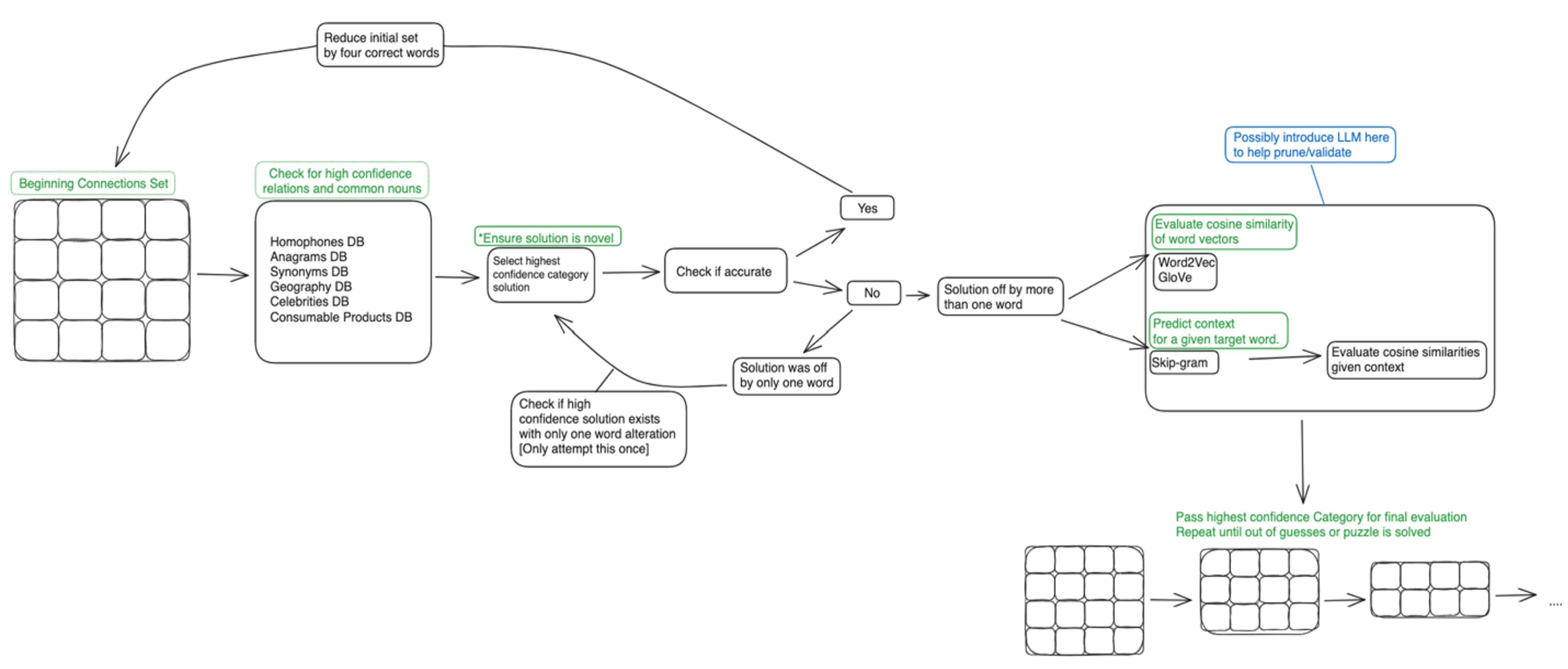

Like I mentioned in the intro, I find NLP interesting, but I am by no means an expert. After my manual checks to catch some of the tricky categories in Connections, I’m not entirely sure what an optimal framework looks like for grouping four words and iterating over a solution:

- Maybe I don’t even need to perform these manual checks if I provide the right context to the correct algorithm?

- I’ve noted “confidence” several times in the diagram, but I’m not sure how to objectively arrive at this or if I’ll need to normalize “confidence” across different categories/difficulties?

- I’ll want to operate on a reduced set of words given that a category has correctly been identified, but I’m not sure if I should throw out previous work to avoid any noise created by the larger set of words?

- I’ll need to figure out how to handle Nouns, single letters, and words with spaces (Maybe this requires injecting a word classification layer between the raw set of words and the cached check for specific categories)

- Not sure I’ll be able to get this category: Reads the Same Rotated 180 degrees: 96, MOW, NOON, SIS

Anyway, that’s part one. Who knows what part two will be, stay greasy!